Datacenter Efficiencies Through Innovative Cooling

This article originally appeared on insidehpc.com

Datacenters designed for High Performance Computing (HPC) applications are more difficult to design and construct than those designed for more basic enterprise applications. Organizations creating these datacenters need to be aware of, and design for, systems that are expected to run at or near their maximum performance for the lifecycle of the servers. While enterprise datacenters can be designed for lower server density and less heat generated per server because of the type of workloads, HPC centers must be designed for higher usage per server. For example, simulations in many domains may run at peak performance (depending on the algorithms) for weeks at a time, while enterprise applications, such as payroll computations, may need peak performance only for short bursts.

OPEX (operating expenses) is the sum of all of the expenses that an organization must pay to keep the servers running. This includes, but is not limited to, items such as electricity, cooling (which itself consumes electricity), construction financing (if new construction was required), and maintenance.

One of the main costs in operating a data center is cooling the servers. When servers used for HPC applications run at full utilization, the CPUs produce more heat than when they are waiting for work. While the performance per watt of CPUs has increased dramatically over the past few decades, HPC installations are built to deliver the maximum performance of the system to the end user. Today’s high-end two-socket HPC servers can approach a 1,000 watt power requirement. On top of the electricity the server itself needs in order to run and perform as expected, a significant amount of power is needed to cool it. CPUs have an envelope of operating temperatures that must be maintained, or the CPU will likely fail, often with a cascading impact on cluster throughput and reliability.
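To make the scale concrete, the sketch below estimates the annual electricity cost of one server running continuously at the roughly 1,000 watts mentioned above. The cooling overhead factor and the electricity price are illustrative assumptions, not figures from this article, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_cost(server_watts: float,
                       cooling_overhead: float,
                       price_per_kwh: float) -> float:
    """Annual electricity cost for one server running at constant load.

    cooling_overhead: extra cooling power per watt of server power (assumed).
    price_per_kwh: electricity price in currency units per kWh (assumed).
    """
    # Server power plus the power spent cooling it, converted to kilowatts.
    total_kw = server_watts * (1.0 + cooling_overhead) / 1000.0
    return total_kw * HOURS_PER_YEAR * price_per_kwh


if __name__ == "__main__":
    # Assumed values: 0.5 W of cooling per server watt, 0.10 per kWh.
    cost = annual_energy_cost(1000.0, cooling_overhead=0.5, price_per_kwh=0.10)
    print(f"Estimated annual cost per server: {cost:,.2f}")
```

Lowering `cooling_overhead` in this model shows how much of the per-server bill depends on cooling rather than on computation.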

Datacenter design has focused in recent years on how to arrange rack after rack of servers in order to isolate and remove the heat produced by the servers, mainly by the CPUs. Most servers today are designed with redundant high-speed fans in the back of the server, so that cool air can be pulled over the CPUs and heat sinks in order to cool them. This leads to data centers designed with hot aisles and cold aisles. For example, two rows of racks may sit back to back. The cooler air from the front of each system is drawn over the hot chips and out into the hot aisle, powerful exhaust fans then pull this hot air away from the hot aisle, and cooled air is returned to the cold aisle. Significant expense is required to contain the hot air in the hot aisle and to remove and cool it.

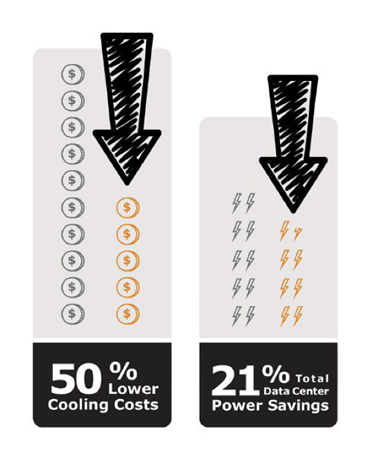

An alternative is to cool the CPUs much closer to the CPU itself. If the amount of hot air produced can be reduced significantly, then less Computer Room Air Conditioning (CRAC) is needed, reducing OPEX. In addition, higher server densities can be achieved, as each server exhausts less hot air into a given space (the hot aisle). Reducing power consumption translates directly into lower overall OPEX. For example, reducing cooling power consumption by 50% can reduce total data center power consumption by 20% or more.
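The arithmetic behind that example can be sketched as follows, reading the 50% reduction as applying to cooling power. The 40% cooling share of total power is an assumption chosen so that the result matches the 20% figure above; it is not a measured value from this article, and the function name is hypothetical.

```python
def total_power_savings(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of total data center power saved when cooling power drops.

    cooling_share: fraction of total power spent on cooling (assumed input).
    cooling_reduction: fractional reduction in cooling power.
    """
    if not (0.0 <= cooling_share <= 1.0 and 0.0 <= cooling_reduction <= 1.0):
        raise ValueError("both arguments must be fractions between 0 and 1")
    # Only the cooling portion of the total shrinks; the rest is unchanged.
    return cooling_share * cooling_reduction


if __name__ == "__main__":
    # Hypothetical facility where cooling draws 40% of total power.
    savings = total_power_savings(cooling_share=0.40, cooling_reduction=0.50)
    print(f"Total power saved: {savings:.0%}")
```

Under this simple model, a facility where cooling takes a larger share of total power would see savings above 20% from the same 50% cut.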

Asetek specializes in liquid cooling systems for data centers, servers, workstations, gaming and high performance PCs. For HPC installations, Asetek RackCDU D2C™ (Direct-to-Chip) applies liquid cooling technology directly on the chip itself. Because liquid is 4,000 times better at storing and transferring heat than air, Asetek’s solutions provide immediate and measurable benefits to large and small data centers alike. The Asetek solution consists of a plate that is attached to the top of the processor and delivers cold liquid to the chip itself. The heated liquid is then pumped away from the CPU to where it can be chilled. This removes almost all of the heat from the server’s airflow, which reduces the CRAC requirements and, in turn, the OPEX.

While the exact OPEX savings will vary depending on CPU loads, electricity costs, number of servers, and server density per rack, using Asetek cooling products can significantly reduce OPEX for small and large data centers. Asetek has designed a simple calculator to assist in computing the cost savings. It is well worth investigating innovative chip and server cooling solutions in order to keep an HPC datacenter running and producing results faster than previous generations of systems.
